FAIR Simple Scalable Static Research Data Repository

2019-11-05

This presentation was given by Peter Sefton & Michael Lynch at the eResearch Australasia 2019 Conference in Brisbane, on the 24th of October 2019.

Welcome - we’re going to share this presentation. Peter/Petie will talk through the two major standards we’re building on, and Mike will talk about the software stack we ended up with.

This project is about building highly scalable research data repositories quickly, cheaply and above all sustainably by using standards for organizing and describing data.

We had a grant from the Australian Research Data Commons to continue our OCFL work. (I’ve used the new Research Organisation Registry (ROR) ID for ARDC, just because it’s new and you should all check out the ROR).

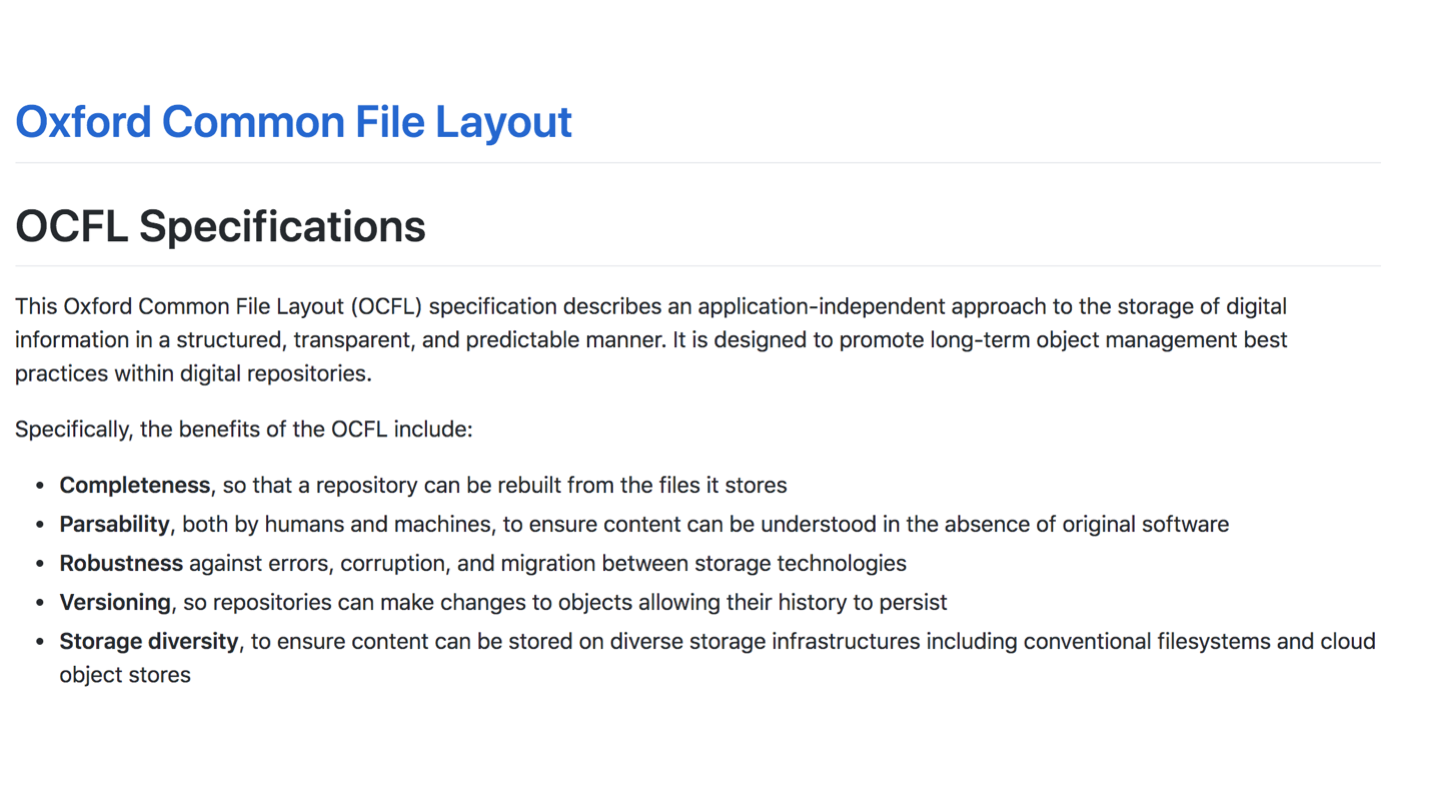

OCFL Specifications

This Oxford Common File Layout (OCFL) specification describes an application-independent approach to the storage of digital information in a structured, transparent, and predictable manner. It is designed to promote long-term object management best practices within digital repositories. Specifically, the benefits of the OCFL include:

Completeness, so that a repository can be rebuilt from the files it stores

Parsability, both by humans and machines, to ensure content can be understood in the absence of original software

Robustness against errors, corruption, and migration between storage technologies

Versioning, so repositories can make changes to objects allowing their history to persist

Storage diversity, to ensure content can be stored on diverse storage infrastructures including conventional filesystems and cloud object stores

Source: https://ocfl.io

Here’s a screenshot of what an OCFL object looks like - it’s a series of versioned directories, each with a detailed inventory.
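To make the idea of an inventory concrete, here is a minimal sketch in Python of how one could be assembled. It is an illustration only, not code from ocfl-js: the function name `build_inventory` and the sample files are made up, and a real OCFL inventory carries further fields (such as a type declaration and per-version creation time, message and user) that are left out here.

```python
import hashlib
import json


def build_inventory(object_id, versions):
    """Build a simplified OCFL-style inventory (illustrative only).

    `versions` is a list of {logical_path: bytes} dicts, one per version,
    each giving the full state of the object at that version.
    """
    manifest = {}            # digest -> content paths stored in the object
    inventory_versions = {}  # version name -> state of that version
    for number, files in enumerate(versions, start=1):
        version = f"v{number}"
        state = {}           # digest -> logical paths in this version
        for logical_path, content in sorted(files.items()):
            digest = hashlib.sha512(content).hexdigest()
            state.setdefault(digest, []).append(logical_path)
            # Content is stored only the first time its digest is seen;
            # later versions refer back to the existing copy.
            if digest not in manifest:
                manifest[digest] = [f"{version}/content/{logical_path}"]
        inventory_versions[version] = {"state": state}
    return {
        "id": object_id,
        "digestAlgorithm": "sha512",
        "head": f"v{len(versions)}",
        "manifest": manifest,
        "versions": inventory_versions,
    }


# Hypothetical object with two versions; the second adds a README.
inventory = build_inventory("example-object", [
    {"data.csv": b"a,b\n1,2\n"},
    {"data.csv": b"a,b\n1,2\n", "README.txt": b"Added a readme\n"},
])
print(json.dumps(inventory, indent=2))
```

The unchanged `data.csv` appears in the manifest once, under `v1`, even though both versions list it in their state. That is what lets the history persist without duplicating content.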

One of the standards we are using is RO-Crate - for describing research data sets. I presented this at eResearch as well [TODO - link]

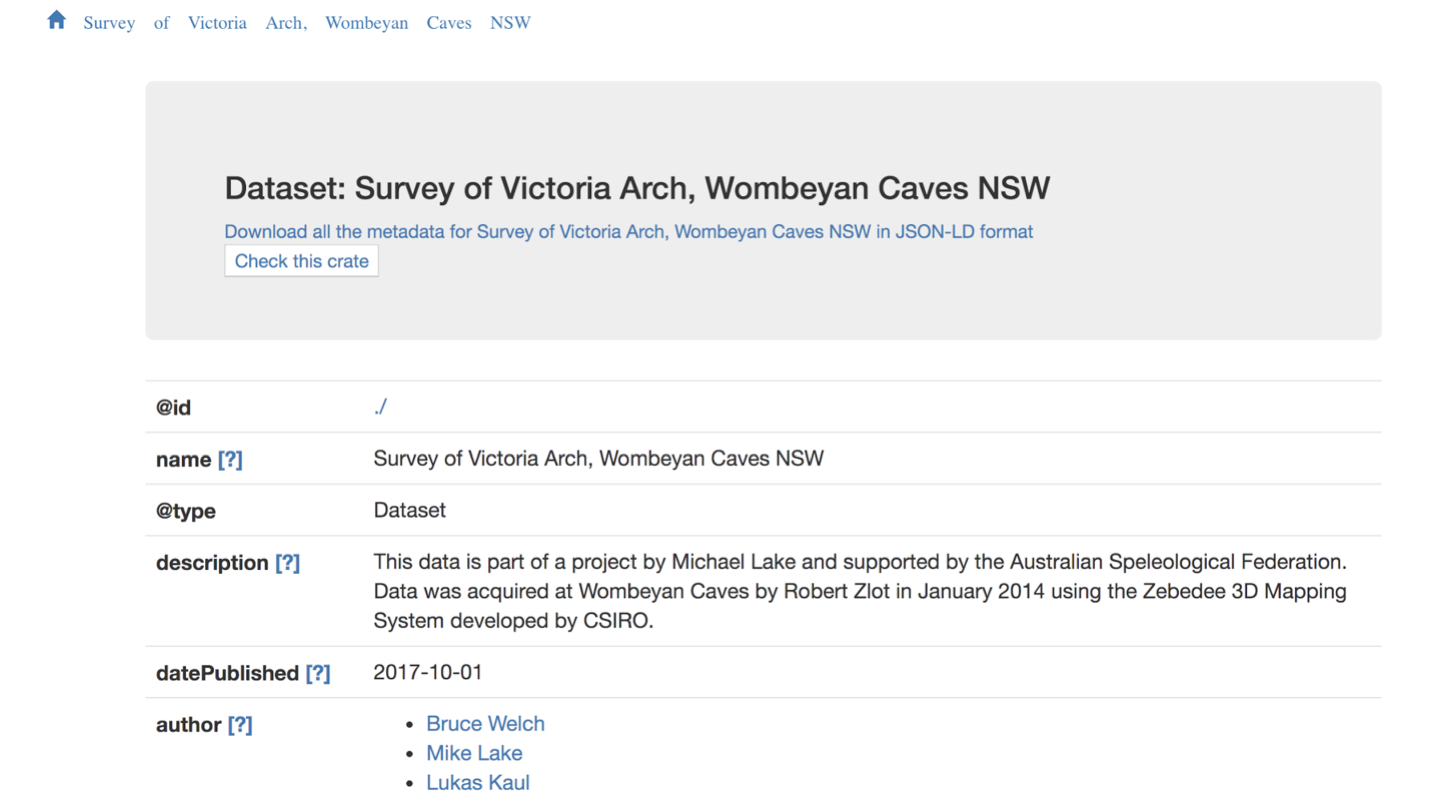

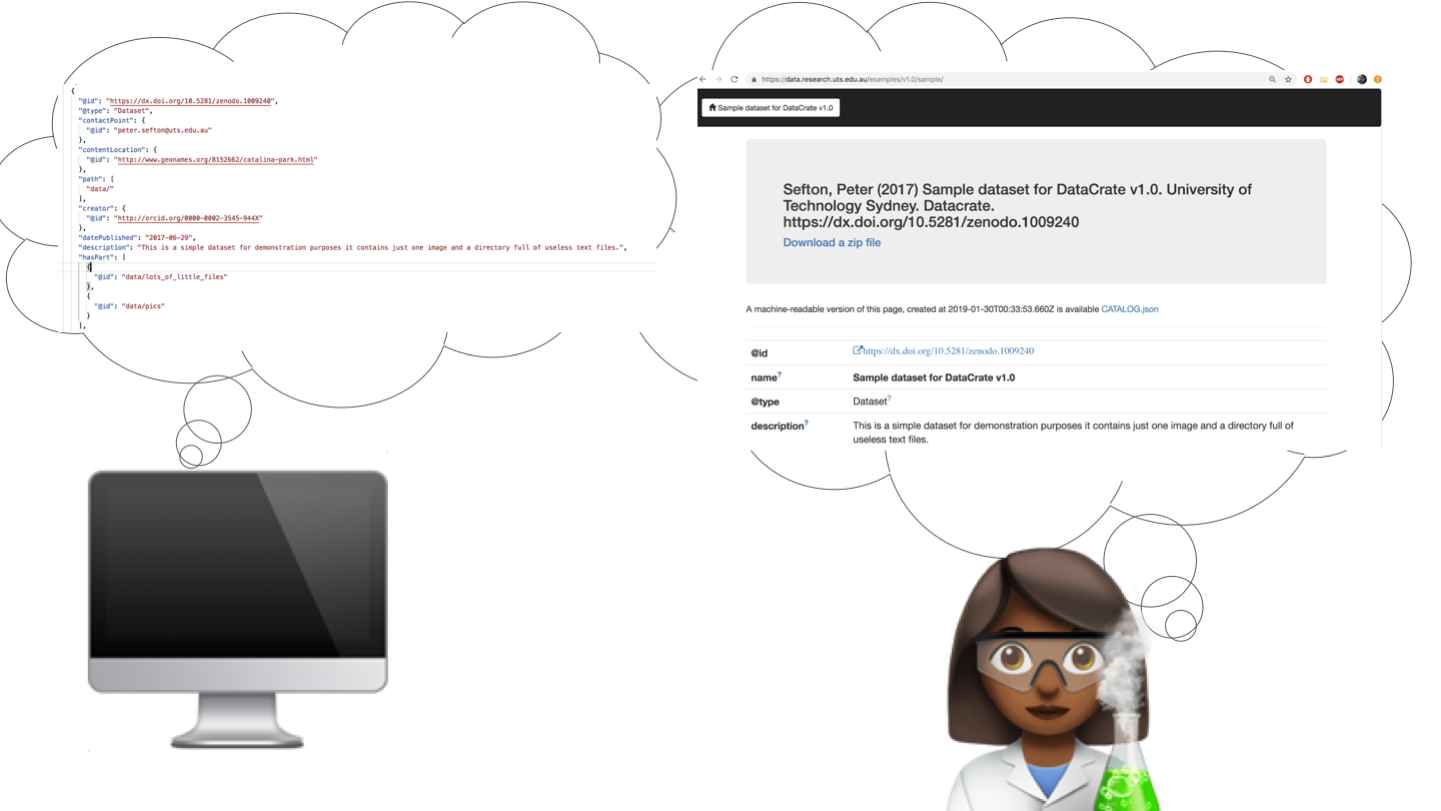

This is an example of an RO-Crate showing that each Crate has a human-readable HTML view as well as a machine-readable view.

The two views (human and machine) of the data are equivalent - in fact the HTML version is generated from the JSON-LD version using a tool called CalcyteJS.
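As a rough sketch of what "generated from the JSON-LD" can mean, the Python below renders a tiny HTML summary from a simplified crate. This is not how CalcyteJS works internally. The crate contents, the context URL and the `render_html` function are assumptions for illustration, and a real RO-Crate contains more entities than shown.

```python
import html

# A simplified, hypothetical crate: a root dataset with one file.
crate = {
    "@context": "https://w3id.org/ro/crate/1.0/context",  # illustrative
    "@graph": [
        {
            "@id": "./",
            "@type": "Dataset",
            "name": "Example dataset",
            "description": "A hypothetical crate used for illustration.",
            "hasPart": [{"@id": "interview.txt"}],
        },
        {"@id": "interview.txt", "@type": "File", "name": "Interview transcript"},
    ],
}


def render_html(crate):
    """Render a very small HTML summary of the crate's root dataset."""
    # Index the flat JSON-LD graph by @id so references can be followed.
    by_id = {entity["@id"]: entity for entity in crate["@graph"]}
    root = by_id["./"]
    items = []
    for ref in root.get("hasPart", []):
        part = by_id[ref["@id"]]
        label = html.escape(part.get("name", part["@id"]))
        href = html.escape(part["@id"], quote=True)
        items.append(f'<li><a href="{href}">{label}</a></li>')
    return (
        f"<h1>{html.escape(root['name'])}</h1>\n"
        f"<p>{html.escape(root['description'])}</p>\n"
        "<ul>\n" + "\n".join(items) + "\n</ul>"
    )


page = render_html(crate)
print(page)
```

Because the HTML is derived entirely from the JSON-LD, the two views cannot drift apart: regenerate the page and it reflects whatever the metadata says.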

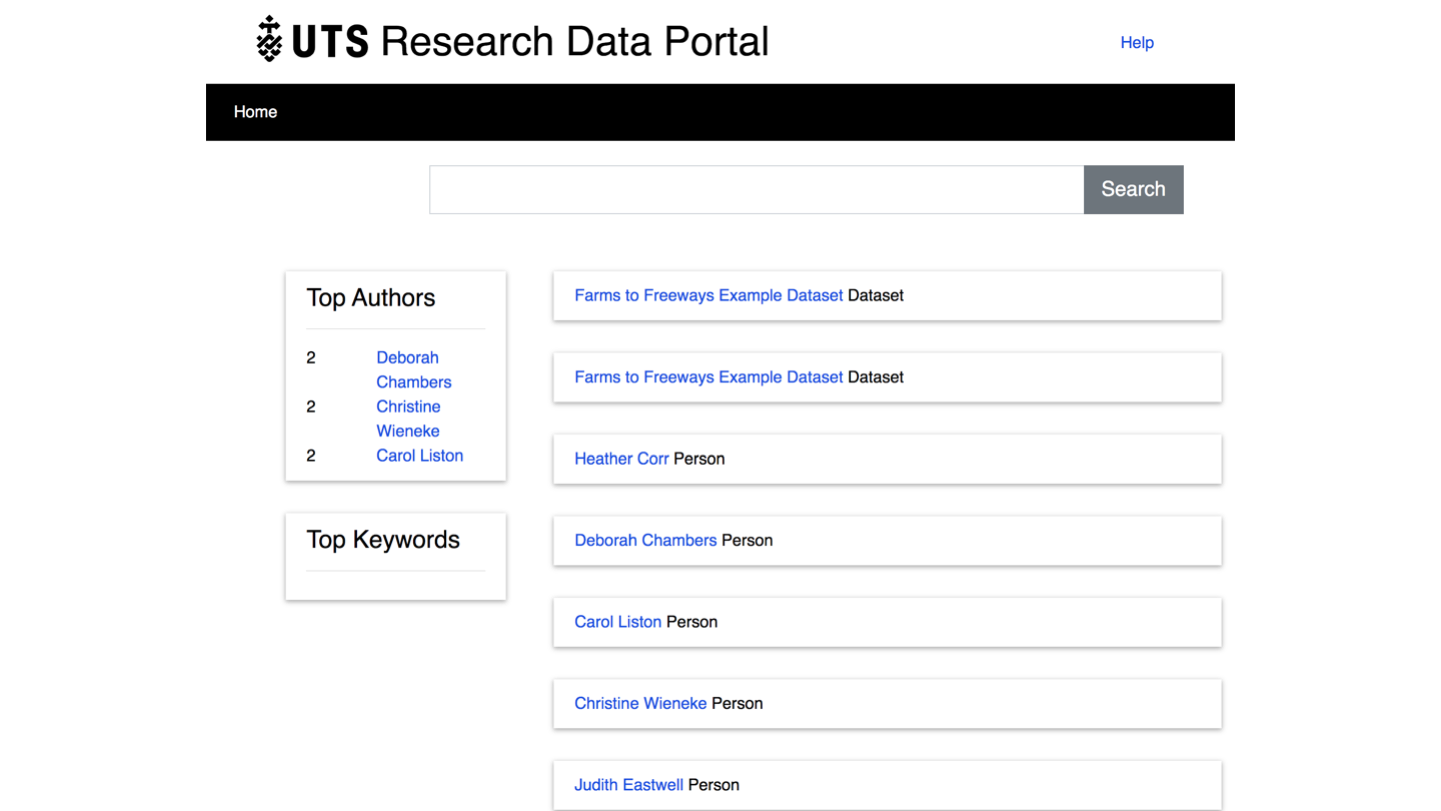

This is a screenshot of work very much in progress - it shows an example of the repository system working at the smallest scale, showing a single collection, “Farms to Freeways”, a social history project from Western Sydney, which we have exported into RO-Crate format as a demonstration. Each of the participants has been indexed for discovery. In a more typical deployment for an institutional repository, datasets would be indexed at the top level only. The point is to show that this software will be highly configurable.

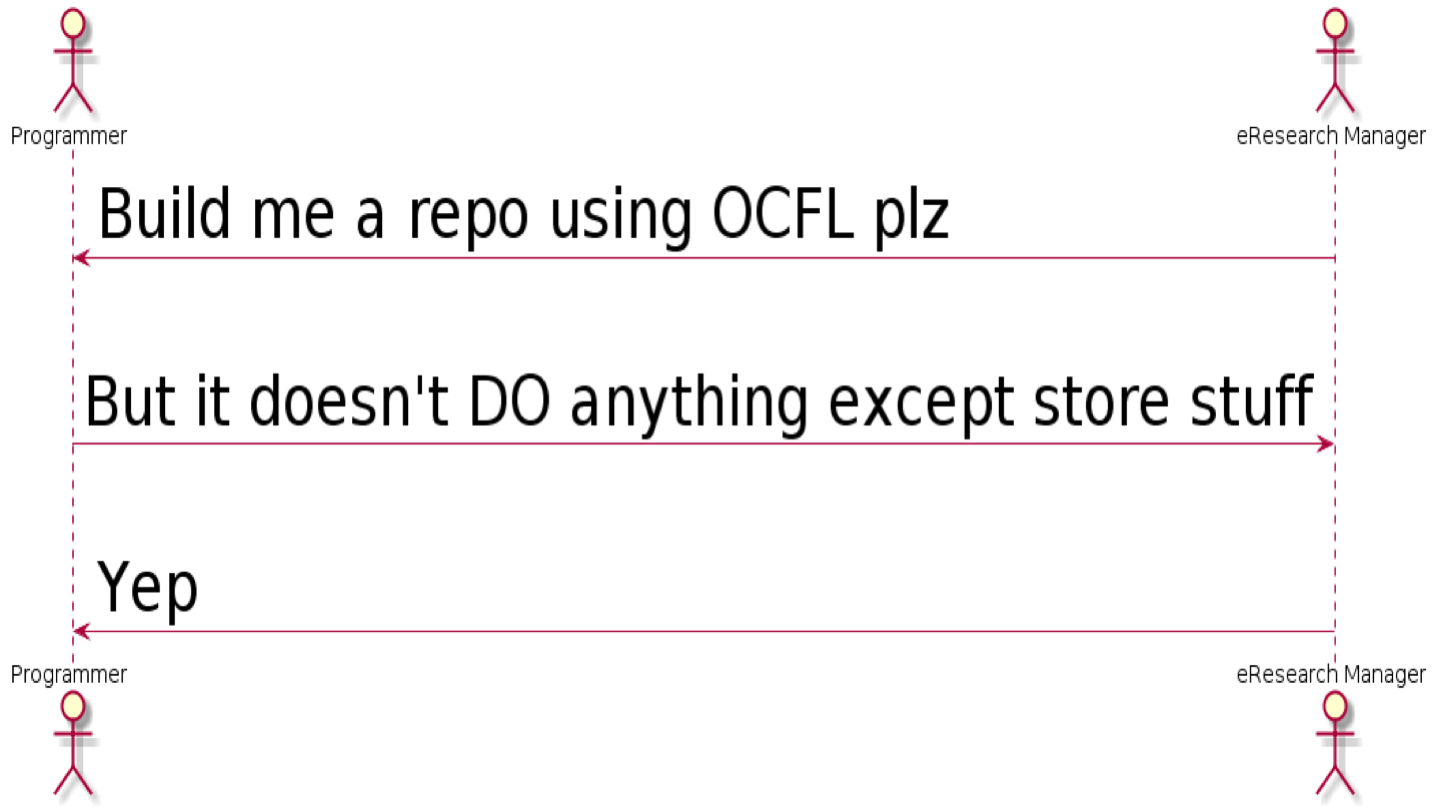

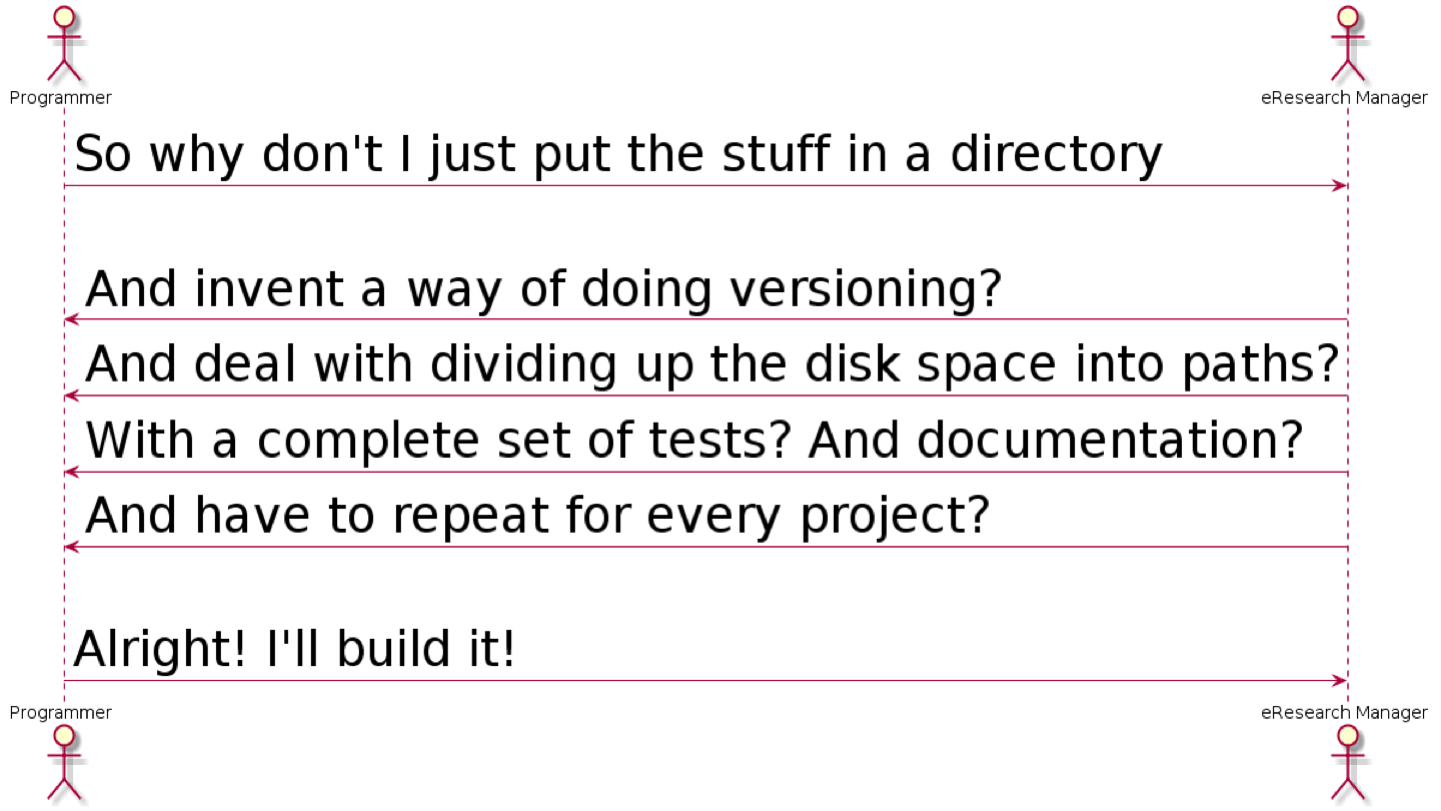

OCFL needs some explaining. I’ve had a couple of conversations with developers where it takes them a little while to get what it’s for.

But they DO get it - the standard is well designed.

Solr is an efficient search engine.

nginx is an industry-standard scalable web server, used by companies like Dropbox and Netflix.

Both are standard, open-source, easy to deploy and keep patched: unlike dedicated data repositories, which tend to be fussy and make your server team swear.

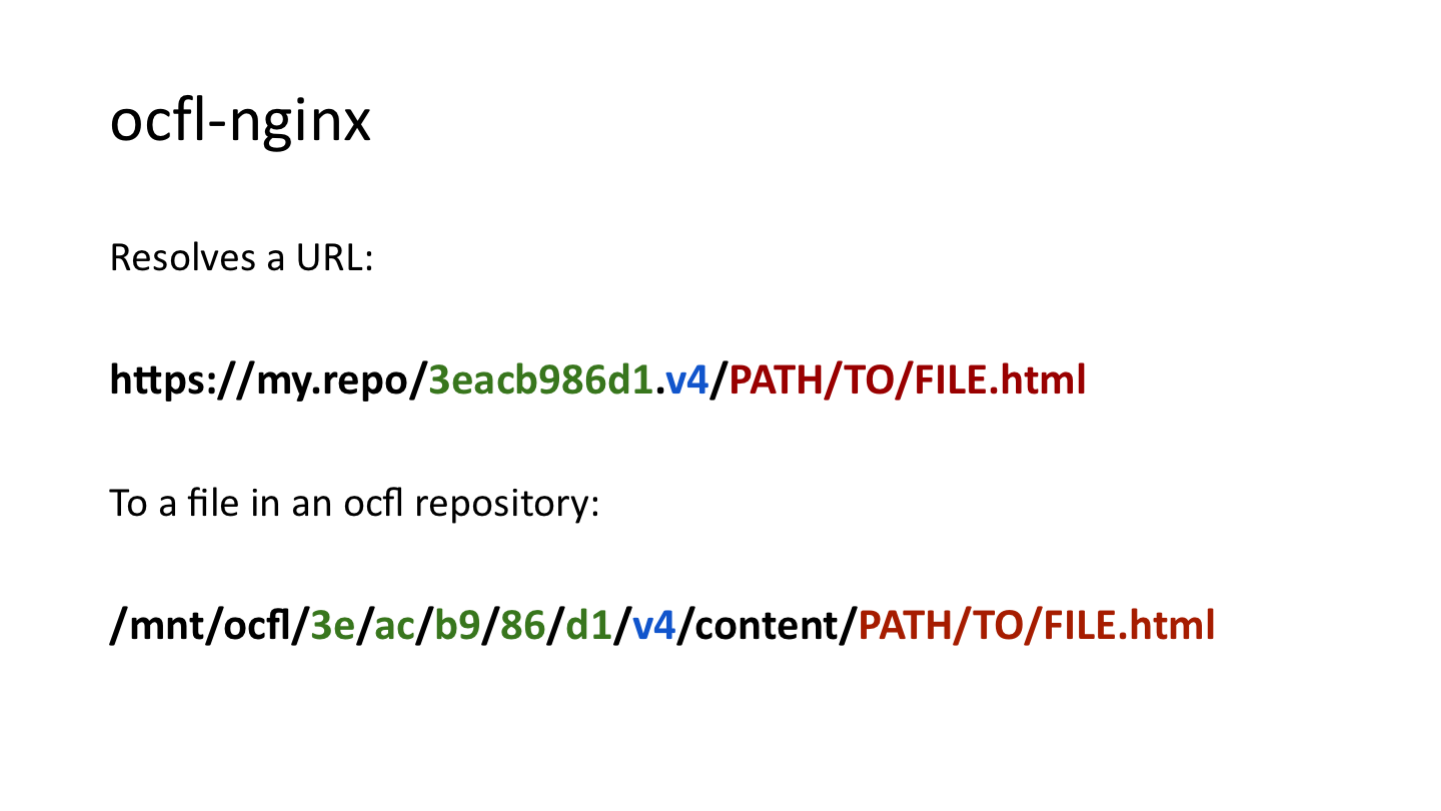

Mapping incoming URLs to the right file in the OCFL repository is straightforward and done with an extension in nginx’s minimal flavour of JavaScript. This slide simplifies things a bit: in real life we have URL ids which Solr maps to OIDs and then to a pairtree path.
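The id-to-path step can be sketched as follows. This is a Python illustration of the general Pairtree technique, not the ocfl-nginx code (which is JavaScript). The lookup table stands in for the Solr query, and the ids in it are hypothetical.

```python
import re

# Stand-in for the Solr lookup: URL id -> OID. Both values are hypothetical.
URL_ID_TO_OID = {"example-dataset": "ark:/13030/xt12t3"}


def clean_id(identifier):
    """Apply the Pairtree character-cleaning rules to an identifier."""
    def hex_escape(match):
        # Encode each UTF-8 byte of the character as ^hh.
        return "".join(f"^{byte:02x}" for byte in match.group(0).encode("utf-8"))

    # Step 1: hex-encode reserved characters and anything outside visible ASCII.
    cleaned = re.sub(r'[^\x21-\x7e]|["*+,<=>?\\^|]', hex_escape, identifier)
    # Step 2: swap characters that are common in ids but awkward in paths.
    return cleaned.translate(str.maketrans({"/": "=", ":": "+", ".": ","}))


def pairtree_path(identifier):
    """Split the cleaned identifier into two-character directory names."""
    cleaned = clean_id(identifier)
    segments = [cleaned[i:i + 2] for i in range(0, len(cleaned), 2)]
    return "/".join(segments)


def resolve(url_id):
    """Map a URL id to a relative pairtree path via the lookup table."""
    return pairtree_path(URL_ID_TO_OID[url_id])


print(resolve("example-dataset"))  # ar/k+/=1/30/30/=x/t1/2t/3
```

The resulting path is relative. Where it sits under the repository root, and what comes after it to reach a particular version and file, depends on how the repository is laid out.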

Todo: we want to use the Memento standard so that clients can request versioned resources.
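Since this is still a todo, the following is only a sketch of the core idea, in Python. In Memento a client sends an `Accept-Datetime` header and the server picks the version that was current at that time. The version names, dates and the `select_version` function are hypothetical.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime

# Hypothetical version history for one object: version name -> creation time.
VERSIONS = {
    "v1": datetime(2019, 3, 1, tzinfo=timezone.utc),
    "v2": datetime(2019, 7, 15, tzinfo=timezone.utc),
    "v3": datetime(2019, 10, 24, tzinfo=timezone.utc),
}


def select_version(accept_datetime, versions=VERSIONS):
    """Return the newest version created at or before the requested time.

    `accept_datetime` is the raw Accept-Datetime header value, or None
    when the client did not send one.
    """
    if accept_datetime is None:
        # No preference expressed: serve the latest version.
        return max(versions, key=versions.get)
    wanted = parsedate_to_datetime(accept_datetime)
    candidates = [v for v, created in versions.items() if created <= wanted]
    if not candidates:
        # The request predates the object: fall back to the first version.
        return min(versions, key=versions.get)
    return max(candidates, key=versions.get)


print(select_version("Thu, 01 Aug 2019 00:00:00 GMT"))  # v2
print(select_version(None))                              # v3
```

With OCFL the version creation times would come from each object's inventory, so this kind of lookup needs no extra bookkeeping.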

We are also looking at versioned DOIs pointing to versioned URLs and resources

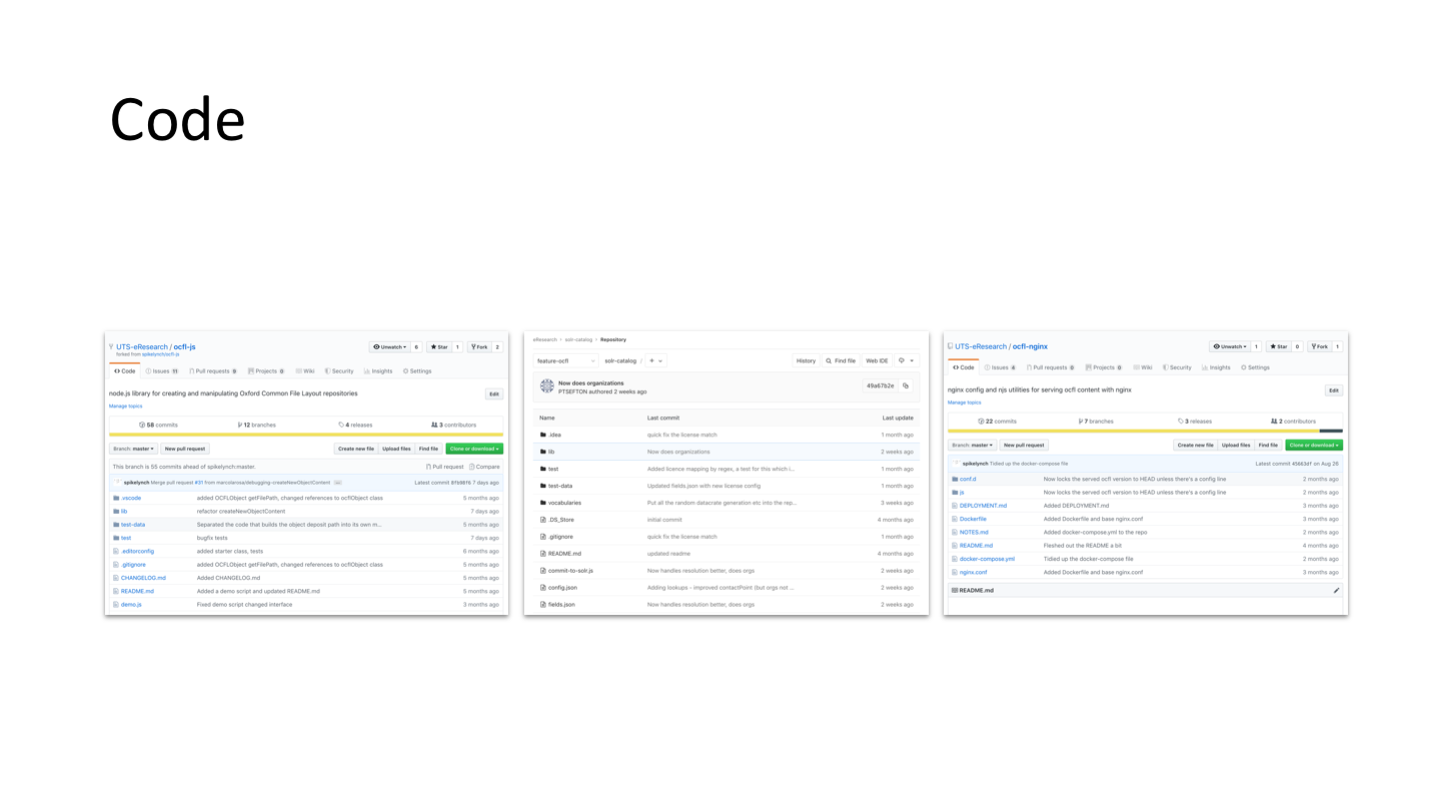

ocfl-js - a Node library for building and updating OCFL repositories

ro-crate-js - a Node library for working with RO-Crates

ocfl-nginx - an extension to nginx allowing it to serve versioned OCFL content

a Docker image for ocfl-nginx

solr-catalog - a Node library for indexing an OCFL repository into a Solr index

data-portal - a single-page application for searching a Solr index

The codebase is in a lot of places but that’s consistent with the approach - they are all just components which we can deploy as we need them

The nginx extension is very small and would be easy to reimplement against another server

This is the most prototypical / primitive part of what we’ve got so far.

Licences on RO-Crates are indexed in the Solr index. nginx authenticates web users, looks up which licences they can access, and applies access control to both search results and payloads.

At the moment, we’ve got a test server which doesn’t authenticate but which only serves datasets with a public licence and denies access to everything else.
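The licence check described above can be sketched like this, in Python. It is a simplified illustration rather than the actual nginx configuration: the index records, licence names, user table and function names are all hypothetical.

```python
PUBLIC_LICENCE = "public"  # hypothetical licence identifier

# Hypothetical index records, standing in for Solr documents.
INDEX = [
    {"id": "dataset-1", "name": "Open survey data", "licence": "public"},
    {"id": "dataset-2", "name": "Staff-only interviews", "licence": "internal"},
]

# Hypothetical table of which licences each authenticated user may access.
USER_LICENCES = {"alice": {"public", "internal"}}


def licences_for(user):
    """Everyone, including unauthenticated guests, gets the public licence."""
    return USER_LICENCES.get(user, set()) | {PUBLIC_LICENCE}


def search(user=None):
    """Return only the index records the user is allowed to see."""
    allowed = licences_for(user)
    return [doc for doc in INDEX if doc["licence"] in allowed]


def can_fetch(dataset_id, user=None):
    """Apply the same licence check before serving a payload."""
    allowed = licences_for(user)
    return any(
        doc["id"] == dataset_id and doc["licence"] in allowed for doc in INDEX
    )


print([doc["id"] for doc in search()])         # guest sees dataset-1 only
print([doc["id"] for doc in search("alice")])  # alice sees both
print(can_fetch("dataset-2"))                  # False for a guest
```

The same check is applied to search results and to payloads, so a guest who guesses a dataset's URL still cannot download something that was hidden from search.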

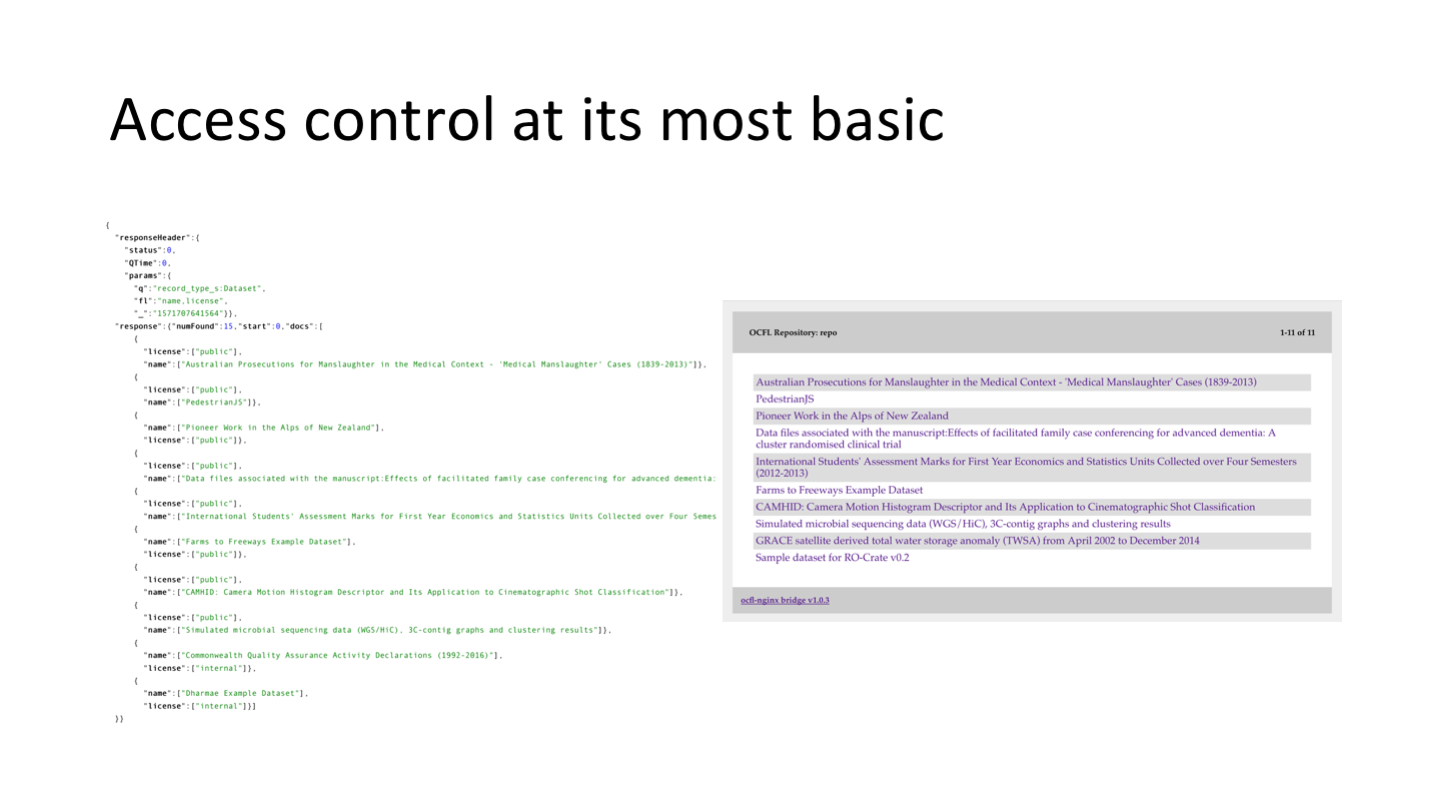

The screenshot on the left is a Solr query showing public and internal licences

The screenshot on the right is a basic web view of what nginx serves to an unauthenticated guest user - datasets with internal licences aren’t shown.

Good data standards make incremental development much easier.

We were able to get real results in one- and two-day workshops with teams from PARADISEC and the State Library of New South Wales, both with large, structured digital humanities collections behind APIs.

Both the OCFL and RO-Crate standards are new and changing, but agile development means that it’s OK and even productive to keep pace with this and feed back into community consultation.

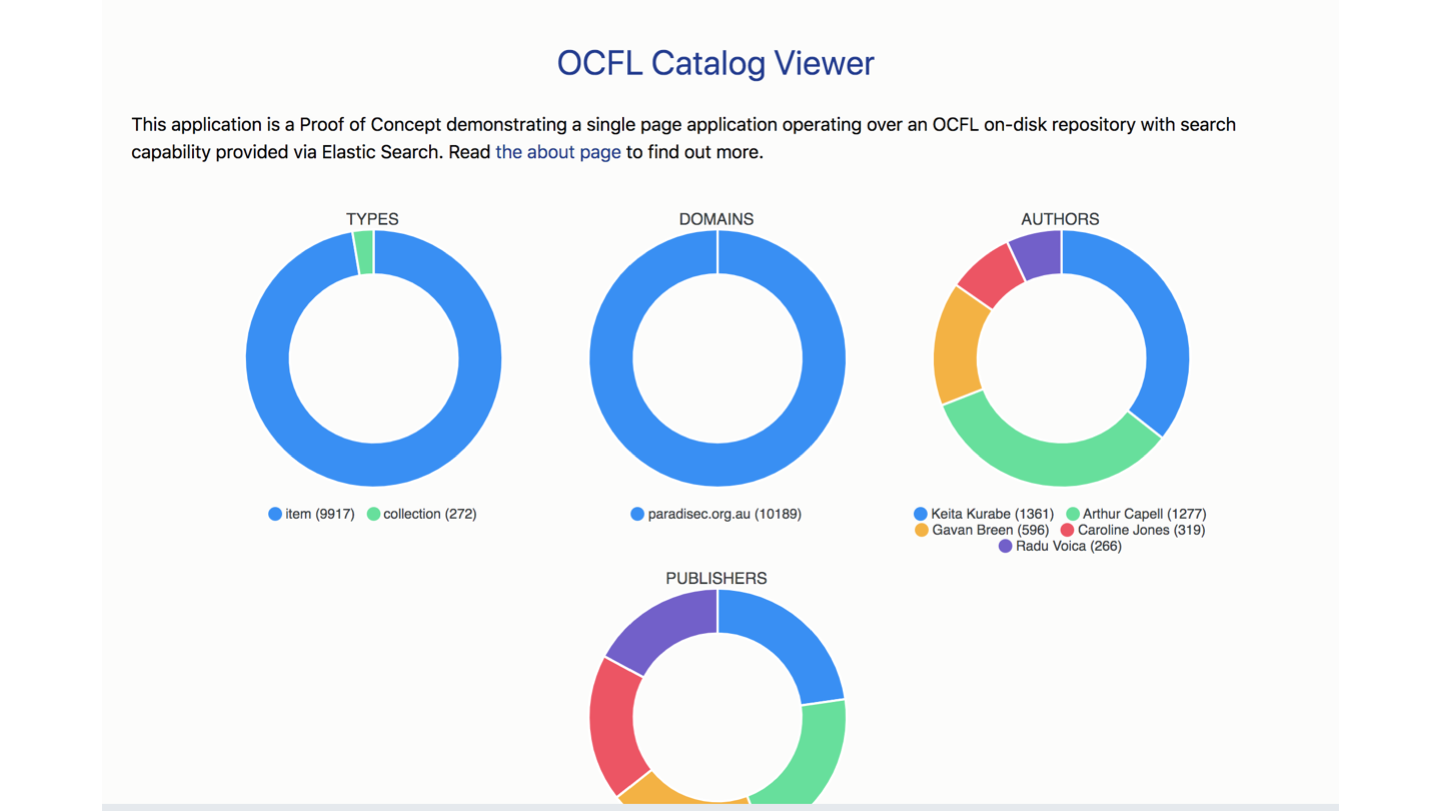

In the last couple of months Marco La Rosa, an independent developer working for PARADISEC, has ported 10,000 data and collection items into RO-Crate format, AND built a portal which can display them. This means that ANY repository with a similar structure (Items in Collections) could easily re-use the code and the viewers for various file types.

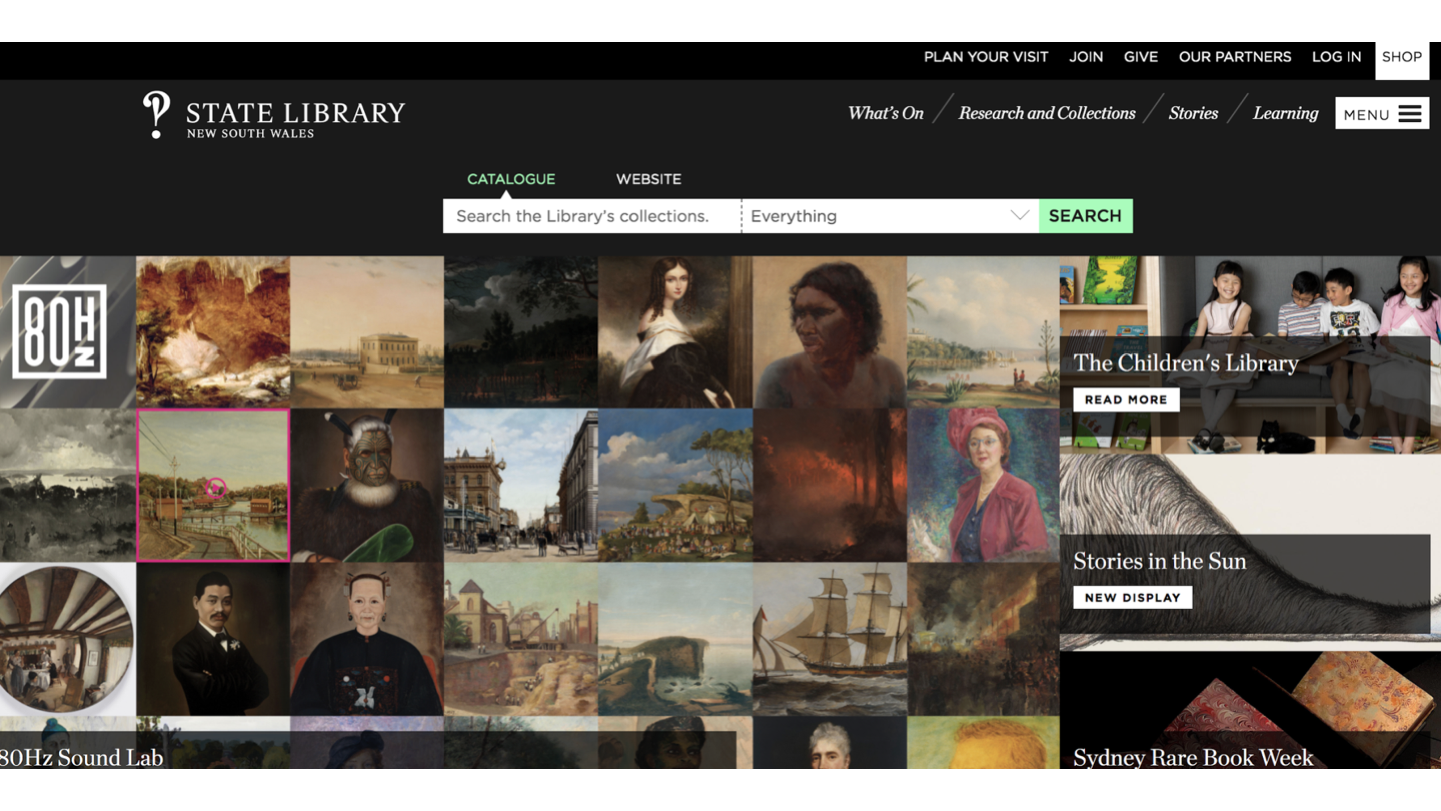

The Mitchell Collection - digitised public domain books with detailed metadata in METS and specialised OCR standards. We spent a day at the State Library and were able to successfully extract books into directories of JPEGs and metadata, package these using RO-Crate and start building an OCFL repository.

This work is licensed under a Creative Commons Attribution 3.0 Australia License.