Towards a (technical architecture for a) HASS Research Data Commons for language and text analysis

2021-10-12

This is a presentation by Peter (Petie) Sefton and Moises Sacal, delivered at the online eResearch Australasia Conference on October 12th 2021.

The presentation was delivered as a recorded video - this is a written version. Moises and I are both employed by the University of Queensland School of Languages and Culture.

Here is the abstract as submitted:

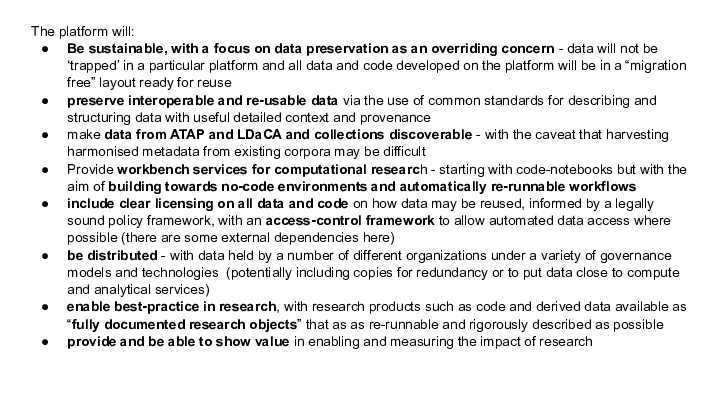

The Language Data Commons of Australia Data Partnerships (LDaCA) and the Australian Text Analytics Platform (ATAP) are building towards a scalable and flexible language data and analytics commons. These projects will be part of the Humanities and Social Sciences Research Data Commons (HASS RDC).

The Data Commons will focus on preservation and discovery of distributed multi-modal language data collections under a variety of governance frameworks. This will include access control that reflects ethical constraints and intellectual property rights, including those of Aboriginal and Torres Strait Islander, migrant and Pacific communities.

The platform will provide workbench services to support computational research, starting with code-notebooks with no-code research tools provided in later phases. Research artefacts such as code and derived data will be made available as fully documented research objects that are re-runnable and rigorously described. Metrics to demonstrate the impact of the platform are projected to include usage statistics, data and article citations.

In this presentation we will present the proposed architecture of the system, the principles that informed it and demonstrate the first version. Features of the solution include the use of the Arkisto Platform (presented at eResearch 2020), which leverages the Oxford Common File Layout. This enables storing complete version-controlled digital objects described using linked data with rich context via the Research Object Crate (RO-Crate) format. The solution features a distributed authorization model where the agency archiving data may be separate from that authorising access.

This cluster of projects is led by Professor Michael Haugh of the School of Languages and Culture at the University of Queensland with several partner institutions.

I work on Gundungurra and Darug land in the Blue Mountains; Moises is on the land of the Gadigal peoples of the Eora Nation. We would like to acknowledge the traditional custodians of the lands on which we live and work, and the importance of Indigenous knowledge, culture and language to these projects.

This work is supported by the Australian Research Data Commons.

We are going to talk about the emerging architecture and focus in on one very important part of it: Access control. 🔏

But first, some background.⛰️

The architecture for the Data Commons project is informed by a set of goals and principles, starting with ensuring that important data assets have the best chance of persisting into the future.

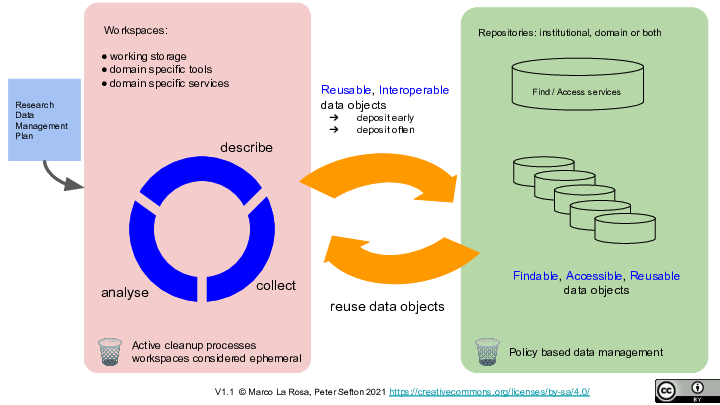

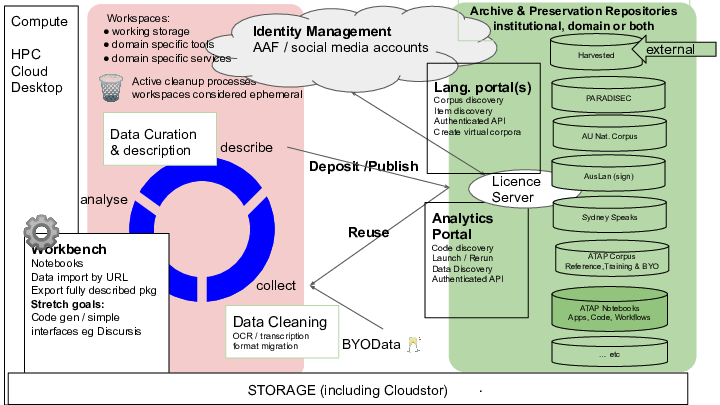

The diagram which we developed with Marco La Rosa makes a distinction between managed repository storage and the places where work is done - “workspaces”. Workspaces are where researchers collect, analyse and describe data. Examples include the most basic of research IT services, file storage as well as analytical tools such as Jupyter notebooks (the backbone of ATAP - the text analytics platform). Other examples of workspaces include code repositories such as GitHub or GitLab (a slightly different sense of the word repository), survey tools, electronic (lab) notebooks and bespoke code written for particular research programmes - these workspaces are essential research systems but usually are not set up for long term management of data. The cycle in the centre of this diagram shows an idealised research practice where data are collected and described and deposited into a repository frequently. Data are made findable and accessible as soon as possible and can be “re-collected” for use and re-use.

For data to be re-usable by humans and machines (such as ATAP notebook code that consumes datasets in a predictable way) it must be well described. The ATAP and LDaCA approach to this is to use the Research Object Crate (RO-Crate) specification. RO-Crate is essentially a guide to using a number of standards and standard approaches to describe both data and re-runnable software such as workflows or notebooks.
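As a rough illustration of what "well described" can look like, the sketch below builds a minimal RO-Crate 1.1 metadata document (the `ro-crate-metadata.json` file) in Python. The collection name, file names and licence URL are hypothetical placeholders, not LDaCA or ATAP data, and a real crate would carry much richer context.

```python
import json


def make_ro_crate_metadata(name, description, date_published, license_id, files):
    """Build a minimal RO-Crate 1.1 metadata document as a Python dict.

    `files` is a list of (relative_path, media_type) pairs.
    """
    # The metadata descriptor identifies this file and points at the root dataset.
    descriptor = {
        "@id": "ro-crate-metadata.json",
        "@type": "CreativeWork",
        "conformsTo": {"@id": "https://w3id.org/ro/crate/1.1"},
        "about": {"@id": "./"},
    }
    # The root dataset describes the crate as a whole and lists its parts.
    root = {
        "@id": "./",
        "@type": "Dataset",
        "name": name,
        "description": description,
        "datePublished": date_published,
        "license": {"@id": license_id},
        "hasPart": [{"@id": path} for path, _ in files],
    }
    # One File entity per payload file.
    file_entities = [
        {"@id": path, "@type": "File", "encodingFormat": media_type}
        for path, media_type in files
    ]
    return {
        "@context": "https://w3id.org/ro/crate/1.1/context",
        "@graph": [descriptor, root, *file_entities],
    }


# Hypothetical example values, for illustration only.
crate = make_ro_crate_metadata(
    name="Example interview collection",
    description="Placeholder corpus used only to show the structure.",
    date_published="2021-10-12",
    license_id="https://example.org/licences/A",
    files=[("interview-01.wav", "audio/x-wav"), ("interview-01.txt", "text/plain")],
)
print(json.dumps(crate, indent=2))
```

Because the description is plain JSON-LD sitting next to the data, notebook code can read the licence and the file list without knowing anything about the repository software that stores the crate.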

In the context of the previous high-level map distinguishing workspaces and repository services, we are using the Arkisto Platform (introduced at eResearch 2020).

Arkisto is an approach to eResearch service that places the emphasis on ensuring the long term preservation of data independently of code and services - recognizing the ephemeral nature of software.

An example of a corpus is the PARADISEC collection: the Pacific and Regional Archive for Digital Sources in Endangered Cultures.

PARADISEC has viewers for various content types: video and audio with time aligned transcriptions, image set viewers and document viewers (xml, pdf and microsoft formats). We are working on making these viewers available across Arkisto sites by having a standard set of hooks for adding viewer plugins to a site as needed.

This slide captures the overall high-level architecture - there will be an analytical workbench (left of the diagram) which is the basis of the Australian Text Analytics Platform (ATAP) project - this will focus on notebook-style programming using one of the emerging Jupyter notebook platforms in that space. The exact platform is not 100% decided yet, but that has not stopped the team from starting to collect and develop notebooks that open up text analytics to new coders from the linguistics community. Our engagement lead, Dr Simon Musgrave, sees the ATAP work as primarily an educational enterprise - which will be underpinned by services built on the Arkisto standards that allow for rigorous, re-runnable research.

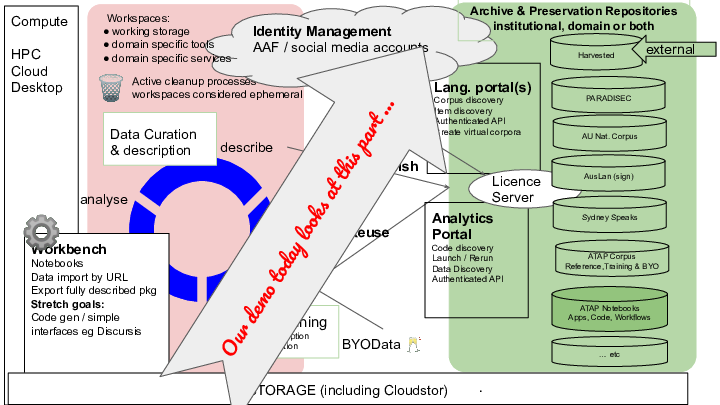

Today we will look in detail at one important part of this architecture - access control. How can we make sure that in a distributed system, with multiple data repositories and registries residing with different data custodians, the right people have access to the right data?

I didn’t spell this out in the recorded conference presentation, but for data that resides in the repositories at the right of the diagram we want to encourage research processes that clearly separate data from code. Notebooks and other code workflows that use data will fetch a version-controlled reference copy from a repository (using an access key if needed), process the data and produce results that are then deposited into an appropriate repository alongside the code itself. Given that a lot of the data in the language world is NOT available under open licenses such as Creative Commons, it is important to establish this practice - each user of the data must negotiate or be granted access individually. Research can still be reproducible under this model, without fostering a culture in which datasets are shared with no regard for the rights of those who were involved in the creation of the data.
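A minimal sketch of the "fetch a reference copy" step might look like the following. The repository URL, the `/object/<id>/<version>` path layout and the bearer-token header are all assumptions made for illustration; the actual repository API is not specified here. The function only builds the request, so the example runs without a network connection.

```python
import urllib.request
from urllib.parse import quote


def build_fetch_request(repository_url, object_id, version, access_key=None):
    """Build (but do not send) an HTTP request for one version of a data object.

    The URL layout and the bearer-token scheme are hypothetical.
    """
    base = repository_url.rstrip("/")
    url = f"{base}/object/{quote(object_id, safe='')}/{quote(version, safe='')}"
    request = urllib.request.Request(url)
    if access_key:
        # Each user presents their own key; the data itself is never bundled with code.
        request.add_header("Authorization", f"Bearer {access_key}")
    return request


# Hypothetical values, for illustration only.
restricted = build_fetch_request(
    "https://repository.example.org/api", "example-corpus", "v2", access_key="my-key"
)
public = build_fetch_request("https://repository.example.org/api", "open-corpus", "v1")

print(restricted.full_url)
print(public.full_url)
```

Pinning the version in the request is what keeps a notebook re-runnable: a later reader with their own access key fetches the same reference copy rather than relying on a file passed around informally.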

Regarding rights, our project is informed by the CARE principles for Indigenous data.

The current movement toward open data and open science does not fully engage with Indigenous Peoples rights and interests. Existing principles within the open data movement (e.g. FAIR: findable, accessible, interoperable, reusable) primarily focus on characteristics of data that will facilitate increased data sharing among entities while ignoring power differentials and historical contexts. The emphasis on greater data sharing alone creates a tension for Indigenous Peoples who are also asserting greater control over the application and use of Indigenous data and Indigenous Knowledge for collective benefit

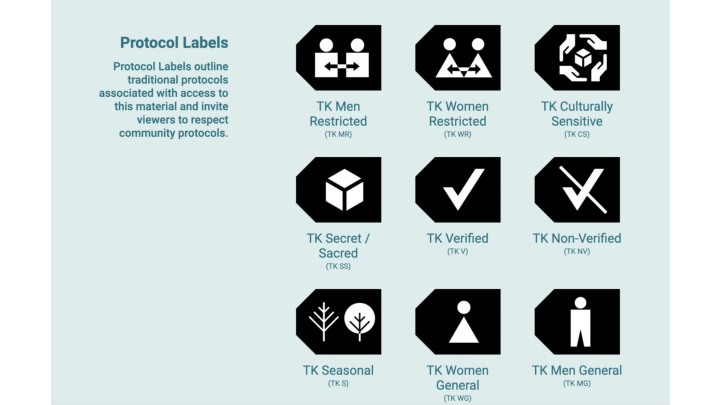

https://localcontexts.org/labels/traditional-knowledge-labels/

We are designing the system so that it can work with diverse ways of expressing access rights, for example licensing like the Traditional Knowledge (TK) Labels. The idea is to separate safe storage of data, with a license on each item (which may reference the TK Labels), from a system administered by the data custodians, who can make decisions about who is allowed to access data.

We are working on a case-study with the Sydney Speaks project via steering committee member Professor Catherine Travis.

This project seeks to document and explore Australian English, as spoken in Australia’s largest and most ethnically and linguistically diverse city – Sydney. The title “Sydney Speaks” captures a key defining feature of the project: the data come from recorded conversations between Sydneysiders, as they tell stories about their lives and experiences, their opinions and attitudes. This allows us to measure how their lived experiences impact their speech patterns. Working within the framework of variationist sociolinguistics, we examine variation in phonetics, grammar and discourse, in an effort to answer questions of fundamental interest both to Australian English, and language variation and change more broadly, including:

- How has Australian English as spoken in Sydney changed over the past 100 years?

- Has the change in the ethnic diversity over that time period (and in particular, over the past 40 years) had any impact on the way Australian English is spoken?

- What affects the way variation and change spread through society?

- Who are the initiators and who are the leaders in change?

- How do social networks function in a modern metropolis?

- What social factors are relevant to Sydney speech today, and over time (gender? class? region? ethnic identity?)

A better understanding of what kind of variation exists in Australian English, and of how and why Australian English has changed over time can help society be more accepting of speech variation and even help address prejudices based on ways of speaking.

Source: http://www.dynamicsoflanguage.edu.au/sydney-speaks/

The collection contains both contemporary and historic recordings of people speaking.

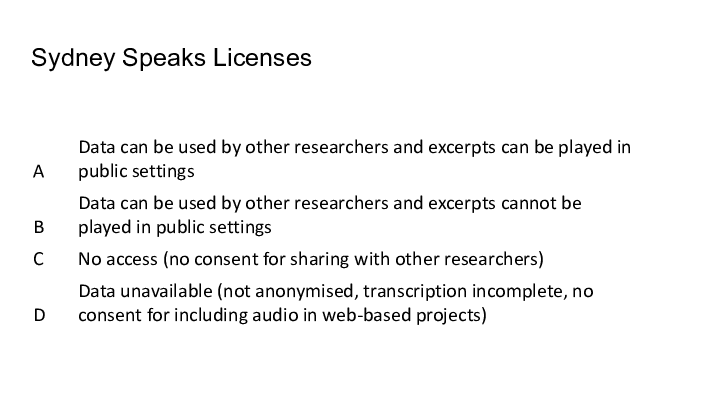

Because this project involved human participants, there are restrictions on the distribution of data - a situation we see with many studies involving people across a huge range of disciplines.

There are four tiers of data access we need to enforce and observe for this data based on the participant agreements and ethics arrangements under which the data were collected.

Concerns about rights and interests are important for any data involving people - and a large amount of the data we are using, both Indigenous and non-Indigenous, will require access control that ensures that data sharing is appropriate.

In this example demo we uploaded various collections and are authorising with GitHub organisations.

In our production release we will use the Australian Access Federation (AAF) to authorise different groups.

Let's find a dataset: The Sydney Speaks Corpus

As you can see, we cannot see any data.

Let's log in… We authorise GitHub…

Now you can see we have access to sub-corpus data, and I am just opening a couple of items.

—

Now in GitHub we can see the group management example.

I have given myself access to all the licences, as you can see here, and given others access to licence A.

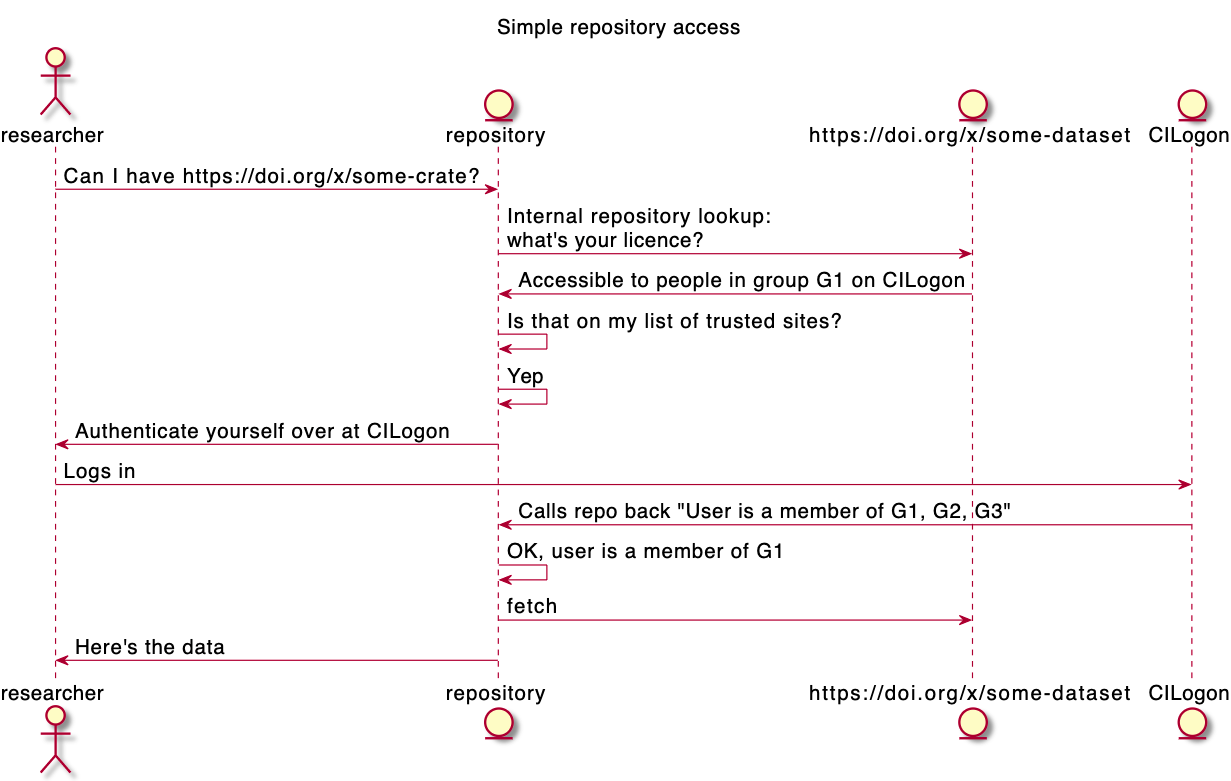

This diagram is a sketch of the interaction that took place in the demo - it shows how a repository can delegate authorization to an external system - in this case GitHub rather than CILogon. We are working with the ARDC to set up a trial with the Australian Access Federation to allow CILogon access for the HASS Research Data Commons so we can pilot group-based access control.

NOTE: This diagram has been updated slightly from the version presented at the conference to make it clear that the lookup to find the licence for the data set is internal to the repository - the id is a DOI but it is not being resolved over the web.
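The interaction in the diagram can be sketched in a few lines of Python. This is an illustrative model under stated assumptions, not the code used in the demo: the class names, the licence-to-group mapping, the user names and the example identifiers are all hypothetical. It shows the two separate responsibilities: the repository looks up an item's licence internally, and an external service answers the question of which groups a user belongs to.

```python
from dataclasses import dataclass, field


@dataclass
class GroupService:
    """Stand-in for the external authorization system (GitHub organisations in the demo)."""

    memberships: dict = field(default_factory=dict)  # user -> set of group names

    def groups_for(self, user):
        return self.memberships.get(user, set())


@dataclass
class Repository:
    """Stores data and licences; delegates 'who is in which group' to the authorizer."""

    licence_of: dict         # item id -> licence id (internal lookup, not resolved over the web)
    group_for_licence: dict  # licence id -> group whose members may access it
    authorizer: GroupService

    def can_access(self, user, item_id):
        licence = self.licence_of.get(item_id)
        required_group = self.group_for_licence.get(licence)
        if required_group is None:
            return False  # unknown item or unmapped licence: deny by default
        return required_group in self.authorizer.groups_for(user)


# Hypothetical setup mirroring the demo: one user holds every licence group,
# another holds only licence A.
groups = GroupService({
    "custodian": {"licence-a", "licence-b"},
    "colleague": {"licence-a"},
})
repo = Repository(
    licence_of={"doi:10.0000/item-1": "A", "doi:10.0000/item-2": "B"},
    group_for_licence={"A": "licence-a", "B": "licence-b"},
    authorizer=groups,
)

print(repo.can_access("custodian", "doi:10.0000/item-2"))
print(repo.can_access("colleague", "doi:10.0000/item-2"))
```

Swapping GitHub for another identity provider would only mean replacing `GroupService`; the repository's licence lookup stays the same.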

In this presentation, about work which is still very much under construction, we have:

- Shown an overview of a complete Data Commons Architecture

- Previewed a distributed access-control mechanism which separates out the job of storing and delivering data from that of authorising access

We'll be back next year with more about how analytics and data repositories connect using structure and linked data.